Excerpt

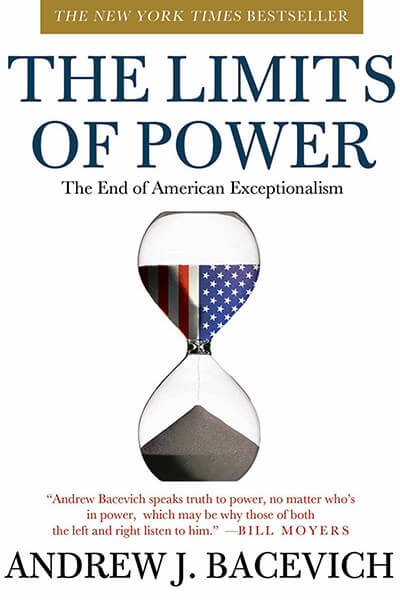

The Limits of Power

The End of American Exceptionalism

by Andrew Bacevich

1. The Crisis of Profligacy

Today, no less than in 1776, a passion for life, liberty, and the pursuit of happiness remains at the center of America’s civic theology. The Jeffersonian trinity summarizes our common inheritance, defines our aspirations, and provides the touchstone for our influence abroad. Yet if Americans still cherish the sentiments contained in Jefferson’s Declaration of Independence, they have, over time, radically revised their understanding of those “inalienable rights.”

Today, individual Americans use their freedom to do many worthy things. Some read, write, paint, sculpt, compose, and play music. Others build, restore, and preserve. Still others attend plays, concerts, and sporting events, visit their local multiplexes, IM each other incessantly, and join “communities” of the like-minded in an ever-growing array of virtual worlds. They also pursue innumerable hobbies, worship, tithe, and, in commendably large numbers, attend to the needs of the less fortunate. Yet none of these in themselves define what it means to be an American in the twenty-first century.

If one were to choose a single word to characterize that identity, it would have to be “more.” For the majority of contemporary Americans, the essence of life, liberty, and the pursuit of happiness centers on a relentless personal quest to acquire, to consume, to indulge, and to shed whatever constraints might interfere with those endeavors. A bumper sticker, a sardonic motto, and a charge dating from the Age of Woodstock have recast the Jeffersonian trinity in modern vernacular: “Whoever dies with the most toys wins”; “Shop till you drop”; “If it feels good, do it.”

It would be misleading to suggest that every American has surrendered to this ethic of self-gratification. Resistance to its demands persists and takes many forms. Yet dissenters, intent on curbing the American penchant for consumption and self-indulgence, are fighting a rear-guard action, valiant perhaps but unlikely to reverse the tide.

The ethic of self-gratification has firmly entrenched itself as the defining feature of the American way of life. The point is neither to deplore nor to celebrate this fact, but simply to acknowledge it. Others have described, dissected, and typically bemoaned the cultural—and even moral—implications of this development.1 Few, however, have considered how an American preoccupation with “more” has affected U.S. relations with the rest of the world. Yet the foreign policy implications of our present-day penchant for consumption and self-indulgence are almost entirely negative. Over the past six decades, efforts to satisfy spiraling consumer demand have given birth to a condition of profound dependency. The United States may still remain the mightiest power the world has ever seen, but the fact is that Americans are no longer masters of their own fate.

The ethic of self-gratification threatens the well-being of the United States. It does so not because Americans have lost touch with some mythical Puritan habits of hard work and self-abnegation, but because it saddles us with costly commitments abroad that we are increasingly ill-equipped to sustain while confronting us with dangers to which we have no ready response. As the prerequisites of the American way of life have grown, they have outstripped the means available to satisfy them. Americans of an earlier generation worried about bomber and missile gaps, both of which turned out to be fictitious. The present-day gap between requirements and the means available to satisfy those requirements is neither contrived nor imaginary. It is real and growing. This gap defines the crisis of American profligacy.

Power and Abundance

Placed in historical perspective, the triumph of this ethic of self-gratification hardly qualifies as a surprise. The restless search for a buck and the ruthless elimination of anyone—or anything—standing in the way of doing so have long been central to the American character. Touring the United States in the 1830s, Alexis de Tocqueville, astute observer of the young Republic, noted the “feverish ardor” of its citizens to accumulate. Yet, even as the typical American “clutches at everything,” the Frenchman wrote, “he holds nothing fast, but soon loosens his grasp to pursue fresh gratifications.” However munificent his possessions, the American hungered for more, an obsession that filled him with “anxiety, fear, and regret, and keeps his mind in ceaseless trepidation.”2

Even in de Tocqueville’s day, satisfying such yearnings as well as easing the anxieties and fears they evoked had important policy implications. To quench their ardor, Americans looked abroad, seeking to extend the reach of U.S. power. The pursuit of “fresh gratifications” expressed itself collectively in an urge to expand, territorially and commercially. This expansionist project was already well begun when de Tocqueville’s famed Democracy in America appeared, most notably through Jefferson’s acquisition of the Louisiana territory in 1803 and through ongoing efforts to remove (or simply eliminate) Native Americans, an undertaking that continued throughout the nineteenth century.

Preferring to remember their collective story somewhat differently, Americans look to politicians to sanitize their past. When, in his 2005 inaugural address, George W. Bush identified the promulgation of freedom as “the mission that created our nation,” neoconservative hearts certainly beat a little faster, as they undoubtedly did when he went on to declare that America’s “great liberating tradition” now required the United States to devote itself to “ending tyranny in our world.” Yet Bush was simply putting his own gloss on a time-honored conviction ascribing to the United States a uniqueness of character and purpose. From its founding, America has expressed through its behavior and its evolution a providential purpose. Paying homage to, and therefore renewing, this tradition of American exceptionalism has long been one of the presidency’s primary extraconstitutional obligations.

Many Americans find such sentiments compelling. Yet to credit the United States with possessing a “liberating tradition” is equivalent to saying that Hollywood has a “tradition of artistic excellence.” The movie business is just that—a business. Its purpose is to make money. If once in a while a studio produces a film of aesthetic value, that may be cause for celebration, but profit, not revealing truth and beauty, defines the purpose of the enterprise.

Something of the same can be said of the enterprise launched on July 4, 1776. The hardheaded lawyers, merchants, farmers, and slaveholding plantation owners gathered in Philadelphia that summer did not set out to create a church. They founded a republic. Their purpose was not to save mankind. It was to ensure that people like themselves enjoyed unencumbered access to the Jeffersonian trinity. In the years that followed, the United States achieved remarkable success in making good on those aims. Yet never during the course of America’s transformation from a small power to a great one did the United States exert itself to liberate others—absent an overriding perception that the nation had large security or economic interests at stake.

From time to time, although not nearly as frequently as we like to imagine, some of the world’s unfortunates managed as a consequence to escape from bondage. The Civil War did, for instance, produce emancipation. Yet to explain the conflagration of 1861–65 as a response to the plight of enslaved African Americans is to engage at best in an immense oversimplification. Near the end of World War II, GIs did liberate the surviving inmates of Nazi death camps. Yet for those who directed the American war effort of 1941–45, the fate of European Jews never figured as more than an afterthought.

Crediting the United States with a “great liberating tradition” distorts the past and obscures the actual motive force behind American politics and U.S. foreign policy. It transforms history into a morality tale, thereby providing a rationale for dodging serious moral analysis. To insist that the liberation of others has never been more than an ancillary motive of U.S. policy is not cynicism; it is a prerequisite to self-understanding.

If the young United States had a mission, it was not to liberate but to expand. “Of course,” declared Theodore Roosevelt in 1899, as if explaining the self-evident to the obtuse, “our whole national history has been one of expansion.” TR spoke truthfully. The founders viewed stasis as tantamount to suicide. From the outset, Americans evinced a compulsion to acquire territory and extend their commercial reach abroad.

How was expansion achieved? On this point, the historical record leaves no room for debate: by any means necessary. Depending on the circumstances, the United States relied on diplomacy, hard bargaining, bluster, chicanery, intimidation, or naked coercion. We infiltrated land belonging to our neighbors and then brazenly proclaimed it our own. We harassed, filibustered, and, when the situation called for it, launched full-scale invasions. We engaged in ethnic cleansing. At times, we insisted that treaties be considered sacrosanct. On other occasions, we blithely jettisoned solemn agreements that had outlived their usefulness.

As the methods employed varied, so too did the rationales offered to justify action. We touted our status as God’s new Chosen People, erecting a “city upon a hill” destined to illuminate the world. We acted at the behest of providential guidance or responded to the urgings of our “manifest destiny.” We declared our obligation to spread the gospel of Jesus Christ or to “uplift little brown brother.” With Woodrow Wilson as our tutor, we shouldered our responsibility to “show the way to the nations of the world how they shall walk in the paths of liberty.”3 Critics who derided these claims as bunkum—the young Lincoln during the war with Mexico, Mark Twain after the imperial adventures of 1898, Senator Robert La Follette amid “the war to end all wars”—scored points but lost the argument. Periodically revised and refurbished, American exceptionalism (which implied exceptional American prerogatives) only gained greater currency.

When it came to action rather than talk, even the policy makers viewed as most idealistic remained fixated on one overriding aim: enhancing American influence, wealth, and power. The record of U.S. foreign relations from the earliest colonial encounters with Native Americans to the end of the Cold War is neither uniquely high-minded nor uniquely hypocritical and exploitive. In this sense, the interpretations of America’s past offered by both George W. Bush and Osama bin Laden fall equally wide of the mark. As a rising power, the United States adhered to the iron laws of international politics, which allow little space for altruism. If the tale of American expansion contains a moral theme at all, that theme is necessarily one of ambiguity.

To be sure, the ascent of the United States did not occur without missteps: opéra bouffe incursions into Canada; William McKinley’s ill-advised annexation of the Philippines; complicity in China’s “century of humiliation”; disastrous post–World War I economic policies that paved the way for the Great Depression; Harry Truman’s decision in 1950 to send U.S. forces north of Korea’s Thirty-eighth Parallel; among others. Most of these blunders and bonehead moves Americans have long since shrugged off. Some, like Vietnam, we find impossible to forget even as we persistently disregard their implications.

However embarrassing, these missteps pale in significance when compared to the masterstrokes of American presidential statecraft. In purchasing Louisiana from the French, Thomas Jefferson may have overstepped the bounds of his authority, and in seizing California from Mexico, James Polk may have perpetrated a war of conquest, but their actions ensured that the United States would one day become a great power. To secure the isthmus of Panama, Theodore Roosevelt orchestrated an outrageous swindle. The canal he built there affirmed America’s hemispheric dominion. In collaborating with Joseph Stalin, FDR made common cause with an indisputably evil figure. Yet this pact with the devil destroyed the murderous Hitler while vaulting the United States to a position of unquestioned global economic supremacy. A similar collaboration—forged by Richard Nixon with the murderous Mao Zedong—helped bring down the Soviet empire, thereby elevating the United States to the self-proclaimed status of “sole superpower.”

The achievements of these preeminent American statesmen derived not from their common devotion to a liberating tradition but from boldness unburdened by excessive scruples. Notwithstanding the high-sounding pronouncements that routinely emanate from the White House and the State Department, the defining characteristic of U.S. foreign policy at its most successful has not been idealism, but pragmatism, frequently laced with pragmatism’s first cousin, opportunism. What self-congratulatory textbooks once referred to as America’s “rise to power” did not unfold according to some preconceived strategy for global preeminence. There was never a secret blueprint or master plan. A keen eye for the main chance, rather than fixed principles, guided policy. If the means employed were not always pretty, the results achieved were often stunning and paid enormous dividends for the American people.

Expansion made the United States the “land of opportunity.” From expansion came abundance. Out of abundance came substantive freedom. Documents drafted in Philadelphia promised liberty. Making good on those promises required a political economy that facilitated the creation of wealth on an enormous scale.

Writing over a century ago, the historian Frederick Jackson Turner made the essential point. “Not the Constitution, but free land and an abundance of natural resources open to a fit people,” he wrote, made American democracy possible.4 A half century later, the historian David Potter discovered a similar symbiosis between affluence and liberty. “A politics of abundance,” he claimed, had created the American way of life, “a politics which smiled both on those who valued abundance as a means to safeguard freedom and those who valued freedom as an aid in securing abundance.”5 William Appleman Williams, another historian, found an even tighter correlation. For Americans, he observed, “abundance was freedom and freedom was abundance.”6

In short, expansion fostered prosperity, which in turn created the environment within which Americans pursued their dreams of freedom even as they argued with one another about just who deserved to share in that dream. The promise—and reality—of ever-increasing material abundance kept that argument within bounds. As the Industrial Revolution took hold, Americans came to count on an ever-larger economic pie to anesthetize the unruly and ameliorate tensions related to class, race, religion, and ethnicity. Money became the preferred lubricant for keeping social and political friction within tolerable limits. Americans, Reinhold Niebuhr once observed, “seek a solution for practically every problem of life in quantitative terms,” certain that more is better.7

This reciprocal relationship between expansion, abundance, and freedom reached its apotheosis in the immediate aftermath of World War II. Assisted mightily by the fratricidal behavior of the traditional European powers through two world wars and helped by reckless Japanese policies that culminated in the attack on Pearl Harbor, the United States emerged as a global superpower, while the American people came to enjoy a standard of living that made them the envy of the world. By 1945, the “American Century” forecast by Time-Life publisher Henry Luce only four years earlier seemed miraculously at hand. The United States was the strongest, the richest, and—in the eyes of its white majority at least—the freest nation in all the world. No people in history had ever ascended to such heights.

In order to gauge the ensuing descent—when the correlation between expansion, abundance, and freedom diminished—it is useful to recall the advantages the United States had secured. By the end of World War II, the country possessed nearly two-thirds of the world’s gold reserves and more than half its entire manufacturing capacity.8 In 1947, the United States by itself accounted for one-third of world exports.9 Its foreign trade balance was comfortably in the black. As measured by value, its exports were more than double its imports.10 The dollar had displaced the British pound sterling as the global reserve currency, with the Bretton Woods system, the international monetary regime created in 1944, making the United States the world’s money manager. The country was, of course, a net creditor. Among the world’s producers of oil, steel, airplanes, automobiles, and electronics, it ranked first in each category. “Economically,” wrote the historian Paul Kennedy, “the world was its oyster.”11

And that was only the beginning. Militarily, the United States possessed unquestioned naval and air supremacy, underscored until August 1949 by an absolute nuclear monopoly, affirmed thereafter by a permanent and indisputable edge in military technology. The nation’s immediate neighbors were weak and posed no threat. Its adversaries were far away and possessed limited reach.

For the average American household, World War II had finally ended the Depression years. Fears that wartime-stoked prosperity might evaporate with the war itself proved groundless. Instead, the transition to peace touched off an unprecedented economic boom. In 1948, American per capita income exceeded by a factor of four the combined per capita income of Great Britain, France, West Germany, and Italy.12 Wartime economic expansion—the gross national product grew by 60 percent between 1939 and 1945—had actually reduced economic inequality.13 Greater income and pent-up demand now combined to create a huge domestic market that kept American factories humming and produced good jobs. As a consequence, the immediate postwar era became the golden age of the American middle class.

Postwar America was no utopia—far from it. Even in a time of bounty, a sizable portion of the population, above all African Americans, knew neither freedom nor abundance. Yet lagging only a step or two behind the chronicle of American expansion abroad is a second narrative of expansion, which played itself out at home. The story it tells is one of Americans asserting their claims to full citizenship and making good on those claims so that over time freedom became not the privilege of the few but the birthright of the many. It too is a dramatic tale of achievement overlaid with ambiguity.

Who merits the privileges of citizenship? The answer prevailing in 1776—white male freeholders—was never satisfactory. By the stroke of a Jeffersonian pen, the Declaration of Independence had rendered such a narrow definition untenable. Pressures to amend that restricted concept of citizenship emerged almost immediately. Until World War II, progress achieved on this front, though real, was fitful. During the years of the postwar economic boom, and especially during the 1960s, the floodgates opened. Barriers fell. The circle of freedom widened appreciably. The percentage of Americans marginalized as “second-class citizens” dwindled.

Many Americans remember the 1960s as the Freedom Decade—and with good cause. Although the modern civil rights movement predates that decade, it was then that the campaign for racial equality achieved its great breakthroughs, beginning in 1963 with the March on Washington and Martin Luther King’s “I Have a Dream” speech. Women and gays followed suit. The founding of the National Organization for Women in 1966 signaled the reinvigoration of the fight for women’s rights. In 1969, the Stonewall Uprising in New York City launched the gay rights movement.

Political credit for this achievement lies squarely with the Left. Abundance, sustained in no small measure by a postwar presumption of American “global leadership,” made possible the expansion of freedom at home. Rebutting Soviet charges of racism and hypocrisy lent the promotion of freedom domestically a strategic dimension. Yet possibility only became reality thanks to progressive political activism. Pick the group: blacks, Jews, women, Asians, Hispanics, working stiffs, gays, the handicapped—in every case, the impetus for providing equal access to the rights guaranteed by the Constitution originated among pinks, lefties, liberals, and bleeding-heart fellow travelers. When it came to ensuring that every American should get a fair shake, the contribution of modern conservatism has been essentially nil. Had Martin Luther King counted on William F. Buckley and the National Review to take up the fight against racial segregation in the 1950s and 1960s, Jim Crow would still be alive and well.

Granting the traditionally marginalized access to freedom has constituted a central theme of American politics since World War II. It does not diminish the credit due to those who engineered this achievement to note that their success stemmed, in part, from the fact that the United States was simultaneously asserting its claim to unquestioned global preeminence. From World War II into the 1960s, more power abroad meant greater abundance at home, which, in turn, paved the way for greater freedom. The reformers who pushed and prodded for racial equality and women’s rights did so in tacit alliance with the officials presiding over the postwar rehabilitation of Germany and Japan, with oil executives pressing to bring the Persian Gulf into America’s sphere of influence, and with defense contractors urging the procurement of expensive new weaponry.

The creation, by the 1950s, of an informal American empire of global proportions was not the result of a conspiracy designed to benefit the few. Postwar foreign policy derived its legitimacy from a widely shared perception that power was being exercised abroad to facilitate the creation of a more perfect union at home. In this sense, General Curtis LeMay’s nuclear strike force, the Strategic Air Command (SAC)—as a manifestation of American might as well as a central component of the postwar military-industrial complex—helped foster the conditions from which Betty Friedan’s National Organization for Women emerged. A proper understanding of contemporary history means acknowledging an ironic kinship between hard-bitten Cold Warriors like General LeMay and left-leaning feminists like Ms. Friedan. SAC helped make possible the feminine mystique and much else besides.

Not Less, But More

The two decades immediately following World War II marked the zenith of what the historian Charles Maier called “the Empire of Production.”14 During these years, unquestioned economic superiority endowed the United States with a high level of strategic self-sufficiency, translating in turn into remarkable freedom of action. In his Farewell Address, George Washington had dreamed of the day when the United States might acquire the strength sufficient “to give it, humanly speaking, the command of its own fortunes.” Strength, the first president believed, would allow the nation to assert real independence, enabling Americans to “choose peace or war, as [their] interest, guided by justice, shall counsel.” In the wake of World War II, that moment had emphatically arrived.

It soon passed. Even before 1950, the United States had begun to import foreign oil. At first, the quantities were trifling. Over time, they grew. Here was the canary in the economic mineshaft. Yet for two decades no one paid it much attention. The empire of production continued churning out a never-ending array of goods, its preeminence seemingly permanent and beyond challenge.

In Europe and East Asia, the United States showed commendable shrewdness in converting economic superiority into strategic advantage. In the twenty years following V-J Day, wrote Maier, “Americans traded wealth for preponderance,” providing assistance to rebuild shattered economies in Western Europe and East Asia and opening up the U.S. market to their products.15 America’s postwar status as leader of the free world was bought and paid for in Washington.

In the 1960s, however, the empire of production began to come undone. Within another twenty years—thanks to permanently negative trade balances, a crushing defeat in Vietnam, oil shocks, “stagflation,” and the shredding of a moral consensus that could not withstand the successive assaults of Elvis Presley, “the pill,” and the counterculture, along with news reports that God had died—it had become defunct. In its place, according to Maier, there emerged a new “Empire of Consumption.” Just as the lunch-bucket-toting factory worker had symbolized the empire of production during its heyday, the teenager, daddy’s credit card in her blue jeans and headed to the mall, now emerged as the empire of consumption’s emblematic figure. The evil genius of the empire of production was Henry Ford. In the empire of consumption, Ford’s counterpart was Walt Disney.

We can fix the tipping point with precision. It occurred between 1965, when President Lyndon Baines Johnson ordered U.S. combat troops to South Vietnam, and 1973, when President Richard M. Nixon finally ended direct U.S. involvement in that war. Prior to the Vietnam War, efforts to expand American power in order to promote American abundance usually proved conducive to American freedom. After Vietnam, efforts to expand American power continued; but when it came to either abundance or freedom, the results became increasingly problematic.

In retrospect, the economic indicators signaling an erosion of dominance seem obvious. The costs of the Vietnam War—and President Johnson’s attempt to conceal them while pursuing his vision of a Great Society—destabilized the economy, as evidenced by deficits, inflation, and a weakening dollar. In August 1971, Nixon tacitly acknowledged the disarray into which the economy had fallen by devaluing the dollar and suspending its convertibility into gold. That, of course, was only the beginning.

Prior to the 1970s, because the United States had long been the world’s number one producer of petroleum, American oil companies determined the global price of oil. In 1972, domestic oil production peaked and then began its inexorable, irreversible decline.16 The year before, the prerogative of setting the price of crude oil had passed into the hands of a new producers’ group, the Organization of the Petroleum Exporting Countries (OPEC).17 As U.S. demand for oil steadily increased, so, too, did overall American reliance on imports.

Simultaneously, a shift in the overall terms of trade occurred. In 1971, after decades in the black, the United States had a negative trade balance. Exports did exceed imports in value in 1973 and again in 1975. From then on, however, it was all red ink; never again would American exports equal imports. In fact, the gap between the two grew at an ever-accelerating rate year by year.18

For the American public, the clearest and most painful affirmation of the nation’s sudden economic vulnerability came with the “oil shock” of 1973, which produced a 40 percent spike in gas prices, long lines at filling stations, and painful shortages. By the late 1970s, a period of slow growth and high inflation, the still-forming crisis of profligacy was already causing real distress in American households.

The first protracted economic downturn since World War II confronted Americans with a fundamental choice. They could curb their appetites and learn to live within their means or deploy dwindling reserves of U.S. power in hopes of obliging others to accommodate their penchant for conspicuous consumption. Between July 1979 and March 1983, a fateful interval bookended by two memorable presidential speeches, they opted decisively for the latter. Here lies the true pivot of contemporary American history, far more relevant to our present predicament than supposedly decisive events like the fall of the Berlin Wall or the collapse of the Soviet Union.

Between the summer of 1979 and the spring of 1983, “global leadership,” the signature claim of U.S. foreign policy, underwent a subtle transformation. Although the United States kept up the pretense that the rest of the world could not manage without its guidance and protection, leadership became less a choice than an imperative. The exercise of global primacy offered a way of compensating for the erosion of a previously dominant economic position. Yet whatever deference Washington was able to command could not conceal the extent to which the United States itself was becoming increasingly beholden to others. Leadership now carried connotations of dependence.

On July 15, 1979, Jimmy Carter delivered the first of those two pivotal speeches. Although widely regarded in our own day as a failed, even a hapless, president, Carter, in this instance at least, demonstrated remarkable foresight. He not only appreciated the looming implications of dependence but also anticipated the consequences of allowing this condition to fester.

The circumstances for Carter’s speech were less than congenial. In the summer of 1979, popular dissatisfaction with his presidency was growing at an alarming rate. The economy was in terrible shape. Inflation had reached 11 percent. Seven percent of American workers were unemployed. The prime lending rate stood at 15 percent and was still rising. By postwar standards, all of these figures were unacceptably high, if not unprecedented. Worse yet, in January 1979, Iranian revolutionaries had ousted the shah of Iran, a longtime U.S. ally, resulting in a second “oil shock.” Gasoline prices in the United States soared, due not to actual shortages but to panic buying. The presidential election season beckoned. If Carter hoped to win a second term, he needed to turn things around quickly.

The president had originally intended to speak on July 5, focusing his address exclusively on energy. At the last minute, he decided to postpone it. Instead, he spent ten days sequestered at Camp David, using the time, he explained, “to reach out and listen to the voices of America.” At his invitation, a host of politicians, academics, business and labor leaders, clergy, and private citizens trooped through the presidential retreat to offer their views on what was wrong with America and what Carter needed to do to set things right. The result combined a seminar of sorts with an exercise in self-flagellation.

The speech that Carter delivered when he returned to the White House bore little resemblance to the one he had planned to give ten days earlier. He began by explaining that he had decided to look beyond energy because “the true problems of our Nation are much deeper.” The energy crisis of 1979, he suggested, was merely a symptom of a far greater crisis. “So, I want to speak to you first tonight about a subject even more serious than energy or inflation. I want to talk to you right now about a fundamental threat to American democracy.”

In short order, Carter proceeded to kill any chance he had of securing reelection. In American political discourse, fundamental threats are by definition external. Nazi Germany, Imperial Japan, or international communism could threaten the United States. That very year, Iran’s Islamic revolutionaries had emerged to pose another such threat. That the actions of everyday Americans might pose a comparable threat amounted to rank heresy. Yet Carter now dared to suggest that the real danger to American democracy lay within.

The nation as a whole was experiencing “a crisis of confidence,” he announced. “It is a crisis that strikes at the very heart and soul and spirit of our national will. We can see this crisis in the growing doubt about the meaning of our own lives and in the loss of a unity of purpose for our nation.” This erosion of confidence threatened “to destroy the social and the political fabric of America.” Americans had strayed from the path of righteousness.
“In a nation that was proud of hard work, strong families, close-knit communities, and our faith in God,” the president continued,

too many of us now tend to worship self-indulgence and consumption. Human identity is no longer defined by what one does, but by what one owns. But we’ve discovered that owning things and consuming things does not satisfy our longing for meaning. We’ve learned that piling up material goods cannot fill the emptiness of lives which have no confidence or purpose.

In other words, the spreading American crisis of confidence was an outward manifestation of an underlying crisis of values. With his references to what “we’ve discovered” and what “we’ve learned,” Carter implied that he was merely voicing concerns that his listeners already shared: that average Americans viewed their lives as empty, unsatisfying rituals of buying, and longed for something more meaningful. To expect Washington to address these concerns was, he made clear, fanciful. According to the president, the federal government had become “an island,” isolated from the people. Its major institutions were paralyzed and corrupt. It was “a system of government that seems incapable of action.” Carter spoke of “a Congress twisted and pulled in every direction by hundreds of well financed and powerful special interests.” Partisanship routinely trumped any concern for the common good: “You see every extreme position defended to the last vote, almost to the last breath by one unyielding group or another.” “We are at a turning point in our history,” Carter announced.

There are two paths to choose. One is a path I’ve warned about tonight, the path that leads to fragmentation and self-interest. Down that road lies a mistaken idea of freedom, the right to grasp for ourselves some advantage over others. That path would be one of constant conflict between narrow interests ending in chaos and immobility.

The continued pursuit of this mistaken idea of freedom was “a certain route to failure.” The alternative—a course consistent with “all the traditions of our past [and] all the lessons of our heritage”—pointed down “another path, the path of common purpose and the restoration of American values.” Down that path, the president claimed, lay “true freedom for our Nation and ourselves.” As portrayed by Carter, the mistaken idea of freedom was quantitative: It centered on the never-ending quest for more while exalting narrow self-interest. His conception of authentic freedom was qualitative: It meant living in accordance with permanent values. At least by implication, it meant settling for less. How Americans dealt with the question of energy, the president believed, was likely to determine which idea of freedom would prevail. “Energy will be the immediate test of our ability to unite this Nation, and it can also be the standard around which we rally.” By raising that standard, Carter insisted, “we can seize control again of our common destiny.” With this in mind, Carter outlined a six-point program designed to end what he called “this intolerable dependence on foreign oil.” He promised action to reduce oil imports by one-half within a decade. In the near term, he vowed to establish quotas capping the amount of oil coming into the country. He called for a national effort to develop alternative energy sources. He proposed legislation mandating reductions in the amount of oil used for power generation. 
He advocated establishment of a new federal agency “to cut through the red tape, the delays, and the endless roadblocks to completing key energy projects.” And finally, he summoned the American people to conserve: “to take no unnecessary trips, to use carpools or public transportation whenever you can, to park your car one extra day per week, to obey the speed limit, and to set your thermostats to save fuel.” Although Carter expressed confidence that the United States could one day regain its energy independence, he acknowledged that in the near term “there [was] simply no way to avoid sacrifice.” Indeed, implicit in Carter’s speech was the suggestion that sacrifice just might be a good thing. For the sinner, some sort of penance must necessarily precede redemption. The response to his address—instantly labeled the “malaise” speech although Carter never used that word—was tepid at best. Carter’s remarks had blended religiosity and populism in ways that some found off-putting. Writing in the New York Times, Francis X. Clines called it the “cross-of-malaise” speech, comparing it unfavorably to the famous “cross-of-gold” oration that had vaulted William Jennings Bryan to political prominence many decades earlier.19 Others criticized what they saw as a penchant for anguished moralizing and a tendency to find fault everywhere except in his own White House. In the New York Times Magazine, Professor Eugene Kennedy mocked “Carter Agonistes,” depicting the president as a “distressed angel, passing judgment on us all, and speaking solemnly not of blood and sweat but of oil and sin.”20 As an effort to reorient public policy, Carter’s appeal failed completely. Americans showed little enthusiasm for the president’s brand of freedom with its connotations of virtuous austerity. Presented with an alternative to quantitative solutions, to the search for “more,” they declined the offer. Not liking the message, Americans shot the messenger. Given the choice, more still looked better. 
Carter’s crisis-of-confidence speech did enjoy a long and fruitful life—chiefly as fodder for his political opponents. The most formidable of them, already the front-runner for the 1980 Republican nomination, was Ronald Reagan, the former governor of California. Reagan portrayed himself as conservative. He was, in fact, the modern prophet of profligacy, the politician who gave moral sanction to the empire of consumption. Beguiling his fellow citizens with his talk of “morning in America,” the faux-conservative Reagan added to America’s civic religion two crucial beliefs: Credit has no limits, and the bills will never come due. Balance the books, pay as you go, save for a rainy day—Reagan’s abrogation of these ancient bits of folk wisdom did as much to recast America’s moral constitution as did sex, drugs, and rock and roll. Reagan offered his preliminary response to Carter on November 13, 1979, the day he officially declared himself a candidate for the presidency. When it came to confidence, the former governor wanted it known that he had lots of it. In a jab at Carter, he alluded to those “who would have us believe that the United States, like other great civilizations of the past, has reached the zenith of its power” and who “tell us we must learn to live with less.” Reagan rejected these propositions. He envisioned a future in which the United States would gain even greater power while Americans would enjoy ever greater prosperity, the one reinforcing the other. The sole obstacle to all this was the federal government, which he characterized as inept, arrogant, and confiscatory. His proposed solution was to pare down the bureaucracy, reduce federal spending, and cut taxes. If there was an energy crisis, that too—he made clear—was the government’s fault. 
On one point at least, Reagan agreed with Carter: “The only way to free ourselves from the monopoly pricing power of OPEC is to be less dependent on outside sources of fuel.” Yet Reagan had no interest in promoting energy independence through reduced consumption. “The answer, obvious to anyone except those in the administration it seems, is more domestic production of oil and gas.” When it came to energy, he was insistent: “We must decide that ‘less’ is not enough.” History remembers Reagan as a fervent Cold Warrior. Yet, in announcing his candidacy, he devoted remarkably little attention to the Soviet Union. In referring to the Kremlin, his language was measured, not belligerent. He did not denounce the Soviets for being “evil.” He made no allusions to rolling back communism. He offered no tribute to the American soldier standing guard on freedom’s frontiers. He said nothing about an urgent need to rebuild America’s defenses. In outlining his views on foreign policy, he focused primarily on his vision of a “North American accord,” an economic union linking the United States, Canada, and Mexico. “It is time we stopped thinking of our nearest neighbors as foreigners,” he declared. As was so often the case, Reagan laid on enough frosting to compensate for any shortcomings in the cake. In his peroration, he approvingly quoted Tom Paine on Americans having the power to “begin the world over again.” He endorsed John Winthrop’s charge that God had commanded Americans to erect “a city upon a hill.” And he cited (without attribution) Franklin D. Roosevelt’s entreaty for the present generation of Americans to keep their “rendezvous with destiny.” For Reagan, the arc of America’s future, like the arc of the American past (at least as he remembered it), pointed ever upward. Overall, it was a bravura performance. And it worked. No doubt Reagan spoke from the heart, but his real gift was a canny knack for telling Americans what most of them wanted to hear. 
As a candidate for the White House, Reagan did not call on Americans to tighten their belts, make do, or settle for less. He saw no need for sacrifice or self-denial. He rejected as false Carter’s dichotomy between quantity and quality. Above all, he assured his countrymen that they could have more. Throughout his campaign, this remained a key theme. The contest itself took place against the backdrop of the ongoing hostage crisis that saw several dozen American diplomats and soldiers held prisoner in Iran. Here was unmistakable evidence of what happened when the United States hesitated to assert itself in this part of the world. The lesson seemed clear: If developments in the Persian Gulf could adversely affect the American standard of living, then control of that region by anyone other than the United States had become intolerable. Carter himself was the first to make this point, when he enunciated the Carter Doctrine in January 1980, vowing to use “any means necessary, including military force,” to prevent a hostile power from dominating the region. Carter’s eleventh-hour pugnacity came too late to save his presidency. The election of 1980 reaffirmed a continuing American preference for quantitative solutions. Despite the advantages of incumbency, Carter suffered a crushing defeat. Reagan carried all but six states and won the popular vote by well over eight million. It was a landslide and a portent. On January 20, 1981, Ronald Reagan became president. His inaugural address served as an occasion to recite various conservative bromides. Reagan made a show of decrying the profligacy of the recent past. “For decades we have piled deficit upon deficit, mortgaging our future and our children’s future for the temporary convenience of the present. To continue this long trend is to guarantee tremendous social, cultural, political, and economic upheavals.” He vowed to put America’s economic house in order.
“You and I, as individuals, can, by borrowing, live beyond our means, but for only a limited period of time. Why, then, should we think that collectively, as a nation, we’re not bound by that same limitation?” Reagan reiterated an oft-made promise “to check and reverse the growth of government.” He would do none of these things. In each case, in fact, he did just the reverse. During the Carter years, the federal deficit had averaged $54.5 billion annually. During the Reagan era, deficits skyrocketed, averaging $210.6 billion over the course of Reagan’s two terms in office. Overall federal spending nearly doubled, from $590.9 billion in 1980 to $1.14 trillion in 1989.21 The federal government did not shrink. It grew, the bureaucracy swelling by nearly 5 percent while Reagan occupied the White House.22 Although his supporters had promised that he would shut down extraneous government programs and agencies, that turned out to be just so much hot air. To call Reagan a phony or a hypocrite is to miss the point. The Reagan Revolution over which he presided was never about fiscal responsibility or small government. The object of the exercise was to give the American people what they wanted, that being the essential precondition for winning reelection in 1984 and consolidating Republican control in Washington. Far more accurately than Jimmy Carter, Reagan understood what made Americans tick: They wanted self-gratification, not self-denial. Although always careful to embroider his speeches with inspirational homilies and testimonials to old-fashioned virtues, Reagan mainly indulged American self-indulgence. Reagan’s two terms in office became an era of gaudy prosperity and excess. Tax cuts and the largest increase to date in peacetime military spending formed the twin centerpieces of Reagan’s economic policy, the former justified by theories of supply-side economics, the latter by the perceived imperative of responding to a Soviet arms buildup and Soviet adventurism. 
Declaring that “defense is not a budget item,” Reagan severed the connection between military spending and all other fiscal or political considerations—a proposition revived by George W. Bush after September 2001. None of this is to suggest that claims of a Reagan Revolution were fraudulent. There was a revolution; it just had little to do with the advertised tenets of conservatism. The true nature of the revolution becomes apparent only in retrospect. Reagan unveiled it in remarks that he made on March 23, 1983, a speech in which the president definitively spelled out his alternative to Carter’s road not taken. History remembers this as the occasion when the president announced his Strategic Defense Initiative—a futuristic “impermeable” antimissile shield intended to make nuclear weapons “impotent and obsolete.” Critics derisively dubbed his proposal “Star Wars,” a label the president, in the end, embraced. (“If you will pardon my stealing a film line—the Force is with us.”) Yet embedded in Reagan’s remarks were two decidedly radical propositions: first, that U.S. security, at a minimum, now required the United States to achieve a status akin to invulnerability; and second, that modern technology was bringing this seemingly utopian goal within reach. Star Wars, in short, introduced into mainstream politics the proposition that Americans could be truly safe only if the United States enjoyed something akin to permanent global military supremacy. Here was Reagan’s preferred response to the crisis that Jimmy Carter had identified in July 1979. Here, too, can be found the strategic underpinnings of George W. Bush’s post-9/11 global war on terror. SDI prefigured the GWOT, both resting on the assumption that military power offered an antidote to the uncertainties and anxieties of living in a world not run entirely in accordance with American preferences.
Whereas President Carter had summoned Americans to mend their ways, which implied a need for critical self-awareness, President Reagan obviated any need for soul-searching by simply inviting his fellow citizens to carry on. For Carter, ending American dependence on foreign oil meant promoting moral renewal at home. Reagan—and Reagan’s successors—mimicked Carter in bemoaning the nation’s growing energy dependence. In practice, however, they did next to nothing to curtail that dependence. Instead, they wielded U.S. military power to ensure access to oil, hoping thereby to prolong the empire of consumption’s lease on life. Carter had portrayed quantity (the American preoccupation with what he had called “piling up material goods”) as fundamentally at odds with quality (authentic freedom as he defined it). Reagan reconciled what was, to Carter, increasingly irreconcilable. In Reagan’s view, quality (advanced technology converted to military use by talented, highly skilled soldiers) could sustain quantity (a consumer economy based on the availability of cheap credit and cheap oil). Pledges of benign intent concealed the full implications of Star Wars. To skeptics—nuclear strategists worried that the pursuit of strategic defenses might prove “destabilizing”—Reagan offered categorical assurances. “The defense policy of the United States is based on a simple premise: The United States does not start fights. We will never be an aggressor. We maintain our strength in order to deter and defend against aggression—to preserve freedom and peace.” According to Reagan, the employment of U.S. forces for anything but defensive purposes was simply inconceivable. 
“Every item in our defense program—our ships, our tanks, our planes, our funds for training and spare parts—is intended for one all-important purpose: to keep the peace.” Reinhold Niebuhr once observed that “the most significant moral characteristic of a nation is its hypocrisy.”23 In international politics, the chief danger of hypocrisy is that it inhibits self-understanding. The hypocrite ends up fooling mainly himself. Whether or not, in 1983, Ronald Reagan sincerely believed that “the United States does not start fights” and by its nature could not commit acts of aggression is impossible to say. He would hardly have been the first politician who came to believe what it was expedient for him to believe. What we can say with certainty is that events in our own time, most notably the Iraq War, have refuted Reagan’s assurances, with fateful consequences. Illusions about military power first fostered by Reagan outlived his presidency. Unambiguous global military supremacy became a standing aspiration; for the Pentagon, anything less than unquestioned dominance now qualified as dangerously inadequate. By the 1990s, the conviction that advanced technology held the key to unlocking hitherto undreamed-of military capabilities had moved from the heavens to the earth. A new national security consensus emerged based on the conviction that the United States military could dominate the planet as Reagan had proposed to dominate outer space. In Washington, confidence that a high-quality military establishment, dexterously employed, could enable the United States, always with high-minded intentions, to organize the world to its liking had essentially become a self-evident truth. In this malignant expectation—not in any of the conservative ideals for which he is retrospectively venerated—lies the essence of the Reagan legacy.

Taking the Plunge

Just beneath the glitter of the Reagan years, the economic position of the United States continued to deteriorate. Despite the president’s promise to restore energy independence, reliance on imported oil soared. By the end of Reagan’s presidency, 41 percent of the oil consumed domestically came from abroad. It was during his first term that growing demand for Chinese goods produced the first negative trade balance with that country. In the same period, Washington—and the American people more generally—resorted to borrowing. Through the 1970s, economic growth had enabled the United States to reduce the size of a national debt (largely accrued during World War II) relative to the overall gross national product (GNP). At the beginning of the Reagan presidency, that ratio stood at a relatively modest 31.5 percent of GNP, the lowest since 1931. Reagan’s huge deficits reversed that trend. The United States had long touted its status as a creditor nation as a symbol of overall economic strength. That, too, ended in the Reagan era. In 1986, the net international investment position of the United States turned negative as U.S. assets owned by foreigners exceeded the assets that Americans owned abroad. The imbalance has continued to grow ever since.24 Even as the United States began accumulating trillions of dollars of debt, the inclination of individual Americans to save began to disappear. For most of the postwar era, personal savings had averaged a robust 8–10 percent of disposable income. In 1985, that figure began a gradual slide toward zero.25 Simultaneously, consumer debt increased, so that by the end of the century household debt exceeded household income.26
American profligacy during the 1980s had a powerful effect on foreign policy. The impact manifested itself in two ways. On the one hand, Reagan’s willingness to spend without limit helped bring the Cold War to a peaceful conclusion.
On the other hand, American habits of conspicuous consumption, encouraged by Reagan, drew the United States ever more deeply into the vortex of the Islamic world, saddling an increasingly debt-ridden and energy-dependent nation with commitments that it could neither shed nor sustain. By expending huge sums on an arsenal of high-tech weapons, Reagan nudged the Kremlin toward the realization that the Soviet Union could no longer compete with the West. By doing nothing to check the country’s reliance on foreign oil, he laid a trap into which his successors would stumble. If Reagan deserves plaudits for the former, he also deserves to be held accountable for the latter. Yet it would be a mistake to imply that there were two Reagans—the one a farsighted statesman who won the Cold War, the other a chucklehead who bollixed up U.S. relations with the Islamic world. Cold War policy and Middle Eastern policy did not exist in separate compartments; they were intimately, if perversely, connected. To employ the formulation preferred by Norman Podhoretz and other neoconservatives—viewing the Cold War and the global war on terror as successors to World Wars I and II—Reagan-era exertions undertaken to win “World War III” inadvertently paved the way for “World War IV,” while leaving the United States in an appreciably weaker position to conduct that struggle. The relationship between World Wars III and IV becomes apparent when recalling Reagan’s policy toward Afghanistan and Iraq—the former a seemingly brilliant success that within a decade gave birth to a quagmire, the latter a cynical gambit that backfired, touching off a sequence of events that would culminate in a stupendous disaster. As noted in the final report of the National Commission on Terrorist Attacks Upon the United States, “A decade of conflict in Afghanistan, from 1979 to 1989, gave Islamist extremists a rallying point and a training field.”27 The commissioners understate the case. 
In Afghanistan, jihadists took on a superpower, the Soviet Union, and won. They gained immeasurably in confidence and ambition, their efforts funded in large measure by the American taxpayer. The billions that Reagan spent funneling weapons, ammunition, and other support to the Afghan mujahideen were as nothing compared to the $1.2 trillion his administration expended modernizing U.S. military forces. Yet American policy in Afghanistan during the 1980s illustrates the Reagan Doctrine in its purest form. In the eyes of Reagan’s admirers, it was his masterstroke, a bold and successful effort to roll back the Soviet empire. The exploits of the Afghan “resistance” fired the president’s imagination, and he offered the jihadists unstinting and enthusiastic support. In designating March 21, 1982, “Afghanistan Day,” for example, Reagan proclaimed, “The freedom fighters of Afghanistan are defending principles of independence and freedom that form the basis of global security and stability.”28 In point of fact, these “freedom fighters” had no interest at all in global security and stability. Reagan’s depiction of their aims inverted the truth, as events soon demonstrated. Once the Soviets departed from Afghanistan, a vicious civil war ensued with radical Islamists—the Taliban—eventually emerging victorious. The Taliban, in turn, provided sanctuary to Al Qaeda. From Taliban-controlled Afghanistan, Osama bin Laden plotted his holy war against the United States. After the attacks of September 11, 2001, the United States rediscovered Afghanistan, overthrew the Taliban, and then stayed on, intent on creating a state aligned with the West. A mere dozen years after the Kremlin had thrown in the towel, U.S. troops found themselves in a position not unlike that of Soviet soldiers in the 1980s—outsiders attempting to impose a political order on a fractious population animated by an almost pathological antipathy toward foreign occupiers. 
As long as it had remained, however tenuously, within the Kremlin’s sphere of influence, Afghanistan posed no threat to the United States, just as, before 1980, the five Central Asian republics of the Soviet Union, forming a crescent north of Afghanistan, hardly registered on the Pentagon’s meter of strategic priorities (or American consciousness). Once the Soviets were ousted from Afghanistan in 1989 and the Soviet Union itself collapsed two years later, all that began to change. In the wake of the 9/11 attacks, planned in Afghanistan, all of “Central Asia suddenly became valuable real estate to the United States.”29 In a sort of reverse domino theory, the importance now attributed to Afghanistan increased the importance of the entire region. After September 2001, U.S. officials and analysts began using terms like strategic, crucial, and critical when referring to Central Asia. Here was yet another large swath of territory to which American interests obliged the United States to attend. So the ripples caused by Reagan’s Afghanistan policy continued to spread. Distracted by the “big war” in Iraq and the “lesser war” in Afghanistan, Americans have paid remarkably little attention to this story. Yet the evolution of military policy in relation to the “stans” of the former Soviet Union nicely illustrates the extent to which a foreign policy tradition of reflexive expansionism remains alive, long after it has ceased to make sense. In the Clinton years, the Pentagon had already begun to express interest in Central Asia, conducting “peacekeeping exercises” in the former Soviet republics and establishing military-to-military exchange programs. In 2001, in conjunction with the U.S. invasion of Afghanistan, the Bush administration launched far more intensive efforts to carve out a foothold for American power across Central Asia. Pentagon initiatives in the region still fall under the official rubric of engagement. 
This anodyne term encompasses a panoply of activities that, since 2001, have included recurring training missions, exercises, and war games; routine visits to the region by senior military officers and Defense Department civilians; and generous “security assistance” subsidies to train and equip local military forces. The purpose of engagement is to increase U.S. influence, especially over regional security establishments, facilitating access to the region by U.S. forces and thereby laying the groundwork for future interventions. With an eye toward the latter, the Pentagon has negotiated overflight rights as well as securing permission to use local facilities in several Central Asian republics. At Manas in Kyrgyzstan, the United States maintains a permanent air base, established in December 2001. The U.S. military presence in Central Asia is a work in progress. Along with successes, there have been setbacks, to include being thrown out of Uzbekistan. Yet as analysts discuss next steps, the terms of the debate are telling. Disagreement may exist on the optimum size of the U.S. “footprint” in the region, but a consensus has formed in Washington around the proposition that some long-term presence is essential. Observers debate the relative merits of permanent bases versus “semiwarm” facilities, but they take it as a given that U.S. forces require the ability to operate throughout the area. We’ve been down this path before. After liberating Cuba in 1898 and converting it into a protectorate, the United States set out to transform the entire Caribbean into an “American lake.” Just as, a century ago, senior U.S. officials proclaimed their concern for the well-being of Haitians, Dominicans, and Nicaraguans, so do senior U.S. officials now insist on their commitment to “economic reform, democratic reform, and human rights” for all Central Asians.30 But this is mere camouflage. 
The truth is that the United States is engaged in an effort to incorporate Central Asia into the Pax Americana. Whereas expansion into the Caribbean a century ago paid economic dividends as well as enhancing U.S. security, expansion into Central Asia is unlikely to produce comparable benefits. It will cost far more than it will ever return. American officials may no longer refer to Afghan warlords and insurgents as “freedom fighters”; yet, to a very large degree, U.S. and NATO forces in present-day Afghanistan are fighting the offspring of the jihadists that Reagan so lavishly supported in the 1980s. Preferring to compartmentalize history into pre-9/11 and post-9/11 segments, Americans remain oblivious to the consequences that grew out of Ronald Reagan’s collaboration with the mujahideen. Seldom has a seemingly successful partnership so quickly yielded poisonous fruit. In retrospect, the results achieved by liberating Afghanistan in 1989 serve as a cosmic affirmation of Reinhold Niebuhr’s entire worldview. Of Afghanistan in the wake of the Soviet withdrawal, truly it can be said, as he wrote long ago, “The paths of progress . . . proved to be more devious and unpredictable than the putative managers of history could understand.”31 When it came to the Persian Gulf, Reagan’s profligacy took a different form. Far more than any of his predecessors, Reagan led the United States down the road to Persian Gulf perdition. History will hold George W. Bush primarily accountable for the disastrous Iraq War of 2003. But if that war had a godfather, it was Ronald Reagan. Committed to quantitative solutions, Reagan never questioned the proposition that the American way of life required ever-larger quantities of energy, especially oil. Since satisfying American demand by expanding domestic oil production was never anything but a mirage, Reagan instead crafted policies intended to alleviate the risks associated with dependency. 
To prevent any recurrence of the oil shocks of 1973 and 1979, he put in motion efforts to secure U.S. dominion over the Persian Gulf. A much-hyped but actually receding Soviet threat provided the rationale for the Reagan military buildup of the 1980s. Ironically, however, the splendid army that Reagan helped create found eventual employment not in defending the West against totalitarianism but in vainly trying to impose an American imperium on the Persian Gulf. To credit Reagan with having conceived a full-fledged Persian Gulf strategy would go too far. Indeed, his administration’s immediate response to various crises roiling the region produced a stew of incoherence. In Lebanon, he flung away the lives of 241 marines in a 1983 mission that still defies explanation. The alacrity with which he withdrew U.S. forces from Beirut after a suicide truck bomb had leveled a marine barracks there suggests that there really was no mission at all. Yet Reagan’s failed intervention in Lebanon seems positively logical compared to the contradictions riddling his policies toward Iran and Iraq. Saddam Hussein’s invasion of Iran touched off a brutal war that spanned Reagan’s presidency. When the Islamic republic seemed likely to win that conflict, Reagan famously “tilted” in favor of Iraq, providing intelligence, loan guarantees, and other support while turning a blind eye to Saddam’s crimes. American assistance to Iraq did not enable Saddam to defeat Iran; it just kept the war going. At about the same time, in what became known as the Iran-Contra Affair, White House operatives secretly and illegally provided weapons to Saddam’s enemies, the ayatollahs ruling Iran, who were otherwise said to represent a dire threat to U.S. national security. For all the attention they eventually attracted, these misadventures served primarily to divert attention from the central thrust of Reagan’s Persian Gulf policy. 
The story lurking behind the headlines was one of strategic reorientation: During the 1980s, the Pentagon began gearing up for large-scale and sustained military operations in the region. This reorientation actually began in the waning days of the Carter administration, when President Carter publicly declared control of the Persian Gulf to be a vital interest. Not since the Tonkin Gulf Resolution has a major statement of policy been the source of greater mischief. Yet for Reagan and for each of his successors, the Carter Doctrine has remained a sacred text, never questioned, never subject to reassessment. As such, it has provided the overarching rationale for nearly thirty years of ever-intensifying military activism in the Persian Gulf. Even so, when Reagan succeeded Carter in January 1981, U.S. forces possessed only the most rudimentary ability to intervene in the Gulf. By the time he left office eight years later, he had positioned the United States to assert explicit military preponderance in the region—as reflected in war plans and exercises, the creation of new command structures, the development of critical infrastructure, the prepositioning of equipment stocks, and the acquisition of basing and overflight rights.32 Prior to 1981, the Persian Gulf had lagged behind Western Europe and Northeast Asia in the Pentagon’s hierarchy of strategic priorities. By 1989, it had pulled even. Soon thereafter, it became priority number one. The strategic reorientation that Reagan orchestrated encouraged the belief that military power could extend indefinitely America’s profligate expenditure of energy. Simply put, the United States would rely on military might to keep order in the Gulf and maintain the flow of oil, thereby mitigating the implications of American energy dependence. By the time that Reagan retired from office, this had become the basis for national security strategy in the region. Reagan himself had given this new strategy a trial run of sorts. U.S. 
involvement in the so-called Tanker War, now all but forgotten, provided a harbinger of things to come. As an ancillary part of their war of attrition, Iran and Iraq had begun targeting each other’s shipping in the Gulf. Attacks soon extended to neutral vessels, with each country determined to shut down its adversary’s ability to export oil. Intent on ensuring that the oil would keep flowing, Reagan reinforced the U.S. naval presence in the region. The waters of the Gulf became increasingly crowded. Then in May 1987, an Iraqi missile slammed into the frigate USS Stark, killing thirty-seven sailors. Saddam Hussein described the attack as an accident and apologized. Reagan generously accepted Saddam’s explanation and blamed Iran for the escalating violence. That same year, Washington responded favorably to a Kuwaiti request for the U.S. Navy to protect its tanker fleet. When, in the course of escort operations in April 1988, the USS Samuel B. Roberts struck an Iranian mine, suffering serious damage, Reagan upped the ante. U.S. forces began conducting attacks on Iranian warships, naval facilities, and oil platforms used for staging military operations. Iranian naval operations in the Gulf soon ceased, although not before an American warship had mistakenly shot down an Iranian commercial airliner, with the loss of nearly three hundred civilians. The Reagan administration congratulated itself on having achieved a handsome victory. For a relatively modest investment—the thirty-seven American sailors killed on the Stark were forgotten almost as quickly as the doomed passengers on Iran Air Flight 655—the United States had seemingly demonstrated its ability to keep open the world’s oil lifeline. But appearance belied a more complex reality. From the outset, Saddam Hussein had been the chief perpetrator of the Tanker War. Reagan’s principal accomplishment had been to lend Saddam a helping hand—at substantial moral cost to the United States. 
The president’s real achievement in the Persian Gulf was to make a down payment on an enterprise destined to consume tens of thousands of lives, many American, many others not, along with hundreds of billions of dollars—to date at least, the ultimate expression of American profligacy. Whatever their professed ideological allegiance or party preference, Reagan’s successors have all adhered to the now hallowed tradition of decrying America’s energy dependence. In 2006, this ritual reached a culminating point of sorts when George W. Bush announced, “America is addicted to oil.” Yet none of Reagan’s successors have taken any meaningful action to address this addiction. Each tacitly endorsed it, essentially acknowledging that dependence had become an integral part of American life. Like Reagan, each of his successors ignored pressing questions about the costs that dependence entailed. That Americans might shake the habit by choosing a different course remains even today a possibility that few are willing to contemplate seriously. After all, as George H. W. Bush declared in 1992, “The American way of life is not negotiable.” With nothing negotiable, dependency bred further dependency that took new and virulent forms. Each of Reagan’s successors relied increasingly on military power to sustain that way of life. The unspoken assumption has been that profligate spending on what politicians euphemistically refer to as “defense” can sustain profligate domestic consumption of energy and imported manufactures. Unprecedented military might could defer the day of reckoning indefinitely—so at least the hope went. The munificence of Reagan’s military expenditures in the 1980s created untold opportunities to test this proposition. 
First the elder Bush, then Bill Clinton, and finally the younger Bush wasted no time in exploiting those opportunities, even as it became ever more difficult to justify any of the military operations mounted under their direction as “defensive.” Despite Reagan’s assurances, by the end of the twentieth century, the United States did, in fact, “start fights” and seemed well on its way to making that something of a national specialty. By the time the elder George Bush replaced Reagan in January 1989, Saddam Hussein’s usefulness to the United States had already diminished. When Saddam sent his army into Kuwait in August 1990 to snatch that country’s oil wealth, he forfeited what little value he still retained in Washington’s eyes. The result was Operation Desert Storm. Not since 1898, when Commodore George Dewey’s squadron destroyed the Spanish fleet anchored in Manila Bay, have U.S. forces won such an ostensibly historic victory that yielded such ironic results. Dewey’s celebrated triumph gained him passing fame but accomplished little apart from paving the way for the United States to annex the Philippines, a strategic gaffe of the first order. By prevailing in the “Mother of All Battles,” Desert Storm commander General H. Norman Schwarzkopf repeated Dewey’s achievement. He, too, won momentary celebrity. On closer examination, however, his feat turned out to be a good deal less sparkling than advertised. Rather than serving as a definitive expression of U.S. military superiority—a show-them-who’s-boss moment—Operation Desert Storm only produced new complications and commitments. One consequence of “victory” took the form of a large, permanent, and problematic U.S. military presence in the Persian Gulf, intended to keep Saddam in his “box” and to reassure regional allies. 
Before Operation Desert Storm, the United States had stationed few troops in the Gulf area, preferring to keep its forces “over the horizon.” After Desert Storm, the United States became, in the eyes of many Muslims at least, an occupying force. The presence of U.S. troops in Saudi Arabia, site of Islam’s holiest shrines, became a source of particular consternation. As had been the case with Commodore Dewey, Schwarzkopf’s victory turned out to be neither as clear-cut nor as cheaply won as it first appeared. On the surface, the U.S. position in the wake of Operation Desert Storm seemed unassailable. In actuality, it was precarious. In January 1993, President Bill Clinton inherited this situation. To his credit, alone among recent presidents, Clinton managed at least on occasion to balance the federal budget. With his enthusiasm for globalization, however, the forty-second president exacerbated the underlying contradictions of the American economy. Oil imports increased by more than 50 percent during the Clinton era.33 The trade imbalance nearly quadrupled.34 Gross federal debt climbed by nearly $1.5 trillion.35 During the go-go dot-com years, however, few Americans attended to such matters. In the Persian Gulf, Clinton’s efforts to shore up U.S. hegemony took the form of a “dual containment” policy targeting both Iran and Iraq. With regard to Iran, containment meant further isolating the Islamic republic diplomatically and economically in order to prevent the rebuilding of its badly depleted military forces. With regard to Saddam Hussein’s Iraq, it meant much the same, including fierce UN sanctions and a program of armed harassment. During the first year of his administration, Clinton developed a prodigious appetite for bombing and, thanks to a humiliating “Black Hawk Down” failure in and retreat from Somalia, an equally sharp aversion to committing ground troops.
Nowhere did Clinton’s infatuation with air power find greater application than in Iraq, which he periodically pummeled with precision-guided bombs and cruise missiles. In effect, the cease-fire that terminated Operation Desert Storm in February 1991 did not end the Persian Gulf War. After a brief pause, hostilities resumed. Over time, they intensified, with the United States conducting punitive air strikes at will. Although Clinton proved circumspect when it came to expending the lives of American soldiers, he expended ordnance with abandon. During the course of his presidency, the navy and air force conducted tens of thousands of sorties into Iraqi airspace, dropped thousands of bombs, and launched hundreds of cruise missiles. Apart from turning various Iraqi military and government facilities into rubble, this cascade of pricey munitions had negligible impact. With American forces suffering not a single casualty, few Americans paid attention to what the ordnance cost or where it landed. After all, whatever the number of bombs dropped, more were always available in a seemingly inexhaustible supply. Despite these exertions, many in Washington—Republicans and Democrats, politicians and pundits—worked themselves into a frenzy over the fact that Saddam Hussein had managed to survive, when the World’s Only Superpower now wished him gone. To fevered minds, Saddam’s defiance made him an existential threat, his mere survival an unendurable insult. In 1998, the anti-Saddam lobby engineered passage through Congress of the Iraq Liberation Act, declaring it “the policy of the United States to seek to remove the Saddam Hussein regime from power in Iraq and to replace it with a democratic government.” The legislation, passed unanimously in the Senate and by a 360–38 majority in the House, authorized that the princely sum of $100 million be dedicated to that objective.
On October 31, President Clinton duly signed the act into law and issued a statement embracing the cause of freedom for all Iraqis. “I categorically reject arguments that this is unattainable due to Iraq’s history or its ethnic or sectarian make-up,” the president said. “Iraqis deserve and desire freedom like everyone else.” All of this—both the gratuitous air war and the preposterously frivolous legislation—amounted to theater. Reality on the ground was another matter. A crushing sanctions regime authorized by the UN, but imposed by the United States and its allies, complicated Saddam’s life and limited the funds available from Iraqi oil, but primarily had the effect of making the wretched existence of the average Iraqi more wretched still. A 1996 UNICEF report estimated that up to half a million Iraqi children had died as a result of the sanctions. Asked to comment, U.S. Ambassador to the United Nations Madeleine Albright did not even question the figure. Instead, she replied, “I think this is a very hard choice, but the price—we think the price is worth it.” No doubt Albright regretted her obtuse remark. Yet it captured something essential about U.S. policy in the Persian Gulf at a time when confidence in American power had reached its acme. In effect, the United States had forged a partnership with Saddam in imposing massive suffering on the Iraqi people. Yet as long as Americans at home were experiencing a decade of plenty—during the Clinton era, consumers enjoyed low gas prices and gorged themselves on cheap Asian imports—the price that others might be paying didn’t much matter. Bill Clinton’s Iraq policy was both strategically misguided and morally indefensible—as ill-advised as John Kennedy’s campaign of subversion and sabotage directed against Cuba in the 1960s, as reprehensible as Richard Nixon’s illegal bombing of Laos and Cambodia in the late 1960s and 1970s. Yet unlike those actions, which occurred in secret, U.S. 
policy toward Iraq in the 1990s unfolded in full view of the American people. To say that the policy commanded enthusiastic popular support would be to grossly overstate the case. Yet few Americans strenuously objected—to the bombing, to congressional posturing, or to the brutal sanctions. Paying next to no attention, the great majority quietly acquiesced and thus became complicit.

American Freedom, Iraqi Freedom